Examining the “Big Requirements Up Front (BRUF) Approach”

BRUF Leads to Significant Wastage

The Chaos Report shares some interesting statistics pertaining to the effectiveness of this sort of approach. The Chaos Report looks at thousands of projects, big and small, in various business domains around the world. The Chaos Study reports that 15% of all projects fail to deliver at all, and that 51% are challenged (they’re severely late and/or over budget). However, the Standish Group has also looked at a subset of traditional teams which eventually delivered into production and asked the question, “Of the functionality which was delivered, how much of it was actually used?” The results are summarized in Figure 1: an astounding 45% of the functionality was never used, and a further 19% was rarely used. In other words, even on these so-called “successful” teams there is significant wastage.

Figure 1: The effectiveness of a serial approach to requirements.

This wastage occurs for several reasons:

- The requirements change. The period from the time that the requirements are “finalized” to the time that the system is actually deployed will often span months, if not years. During this timeframe the marketplace will change, legislation will change, and your organization’s strategy will change. All of these changes in turn will motivate true changes to your requirements.

- People’s understanding of the requirements changes. More often than not, what we identify as a changed requirement is really just an improved understanding of the actual requirement. People aren’t very good at predicting what they want. They are good at indicating what they sort of think they might want, and then, once they see what you’ve built, they can provide feedback on how to get it closer to what is actually needed. It’s natural for people to change their minds, so you need an approach which easily enables this.

- People make up requirements. An unfortunate side effect of the traditional approach to development is that we’ve taught several generations of stakeholders that it’s going to be relatively painless to define requirements early, but relatively painful to change their minds later. In other words, they’re motivated to brainstorm requirements which they think they just might need at some point, guaranteeing that a large percentage of the functionality that they specify really isn’t needed in actual practice.

- You effectively put a cap on what you will deliver. Very often with a BRUF approach you in effect define the best that will be delivered by the team and then throughout the initiative you negotiate downwards from there as you begin to run out of resources or time.

Why do organizations choose to work this way? Often they want to identify the requirements early in the lifecycle so that they can put together what they believe to be an accurate budget and schedule. This ignores the fact that this hasn’t worked out very well in the past (51% of projects are arguably challenged), but I guess hope springs eternal. Yes, the more information that you have the better your budget and schedule can be, but software development is so dynamic that at best all we can do is define a very large range at the beginning of an initiative and narrow it down as things progress. Although such an estimate may be soothing to the people desperately trying to manage the team, I personally wouldn’t want to invest much effort in putting it together, particularly when there are better alternatives.

Many organizations tend to treat custom software as if they were purchasing it off the shelf: they treat it as a one-time purchase. The reality is that you’re building a system which will be maintained and evolved for many years to come. It’s really a product (involving many releases over time, often implemented as a series of “projects”) that you need to manage, not a project. Therefore, a fixed set of requirements with a budget tied to meeting those requirements is an unrealistic way to work.

Many organizations are also convinced that software is very hard to change. This is true if it’s difficult to redeploy newer versions of the software, but most organizations these days have automated their release process for the majority of their systems. The data community often convinces itself that it’s difficult to refactor databases, which is simply not true, or that it must negotiate a single version of the truth for its data in order to proceed with development (also not true).

Worse yet, some people naively think that developers do their work based on what is in the requirements document. If this were true, wouldn’t it be common to see printed requirements documents open on every programmer’s desk, or displayed in a window on their screens? If you walk around your IT department one day, I suspect you’ll need only one hand to keep track of the number of people actually doing this.

An interesting question that wasn’t asked, to my knowledge, was how much required functionality wasn’t delivered as a result of a BRUF approach. We have no measure, yet, of the opportunity cost of this approach.

To recap, many organizations are motivated to comprehensively define requirements early in an initiative in order to put together an accurate estimate and schedule. Even ignoring the fact that most initiatives are challenged, Figure 1 shows that when this approach does succeed roughly two thirds of your investment is wasted (45% of the delivered functionality is never used and a further 19% is rarely used). Although this should be enough to motivate you to change your approach, it just gets worse.

Take an Evolutionary Approach to Development

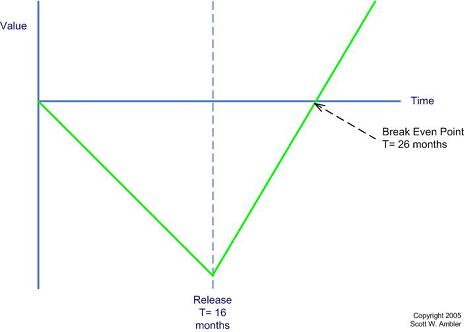

Assuming that you can live with two-thirds wastage, as the majority of organizations seem to do, a serial approach to development is still a poor way to work. Figure 2 depicts the break-even graph for a fictional team taking a serial approach to development, whereas Figure 3 shows a similar graph for a team taking an evolutionary approach. With an evolutionary approach you work iteratively and deliver incrementally. When you work iteratively, instead of trying to define all the requirements up front, then developing a design based on those requirements, then writing code, and so on, you do a little modeling, then a little coding, then some more modeling, and so on. When you deliver incrementally, you release the system a piece at a time. As you can see, the serial team developed the entire system over a 16-month period, whereas the evolutionary team had an initial release after 8 months, another 4 months later, and one more after another 4 months.

Figure 2. Break even for a serial project.

Figure 3. Break even for an evolutionary project.

The serial team’s break-even point occurs 26 months after the start of the project, whereas the evolutionary team achieves break-even after 14 months. With the evolutionary approach you not only spend less money at first, you also start receiving the benefits of having working software in production earlier. Better yet, if you develop the highest priority requirements first (more on this in a minute), you gain a higher rate of return on your initial investment. Furthermore, the evolutionary approach is less risky from a financial point of view. Not only does it pay off earlier, always a good thing, but you can also easily stop development if you realize that an interim release already delivers the critical functionality that you actually need.
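To make the break-even arithmetic concrete, here is a minimal Python sketch that finds the month in which cumulative net cash flow first reaches zero. The monthly cost, the release dates, and the value each release adds are all invented for illustration (they are not the data behind Figures 2 and 3), and the model assumes that spending stops once development ends and that a release starts paying off in the month after it ships. The names `schedule` and `break_even_month` are hypothetical.

```python
from itertools import accumulate

HORIZON = 36          # months to simulate (hypothetical)
COST_PER_MONTH = 100  # development spend per month, in made-up units

def schedule(horizon, dev_months, cost_per_month, releases):
    """Build monthly cost and benefit lists.

    releases is a list of (release_month, added_monthly_benefit) pairs; a
    release starts producing benefit in the month after it ships. Spending
    is assumed to stop when development ends (no maintenance costs)."""
    costs, benefits = [], []
    for month in range(1, horizon + 1):
        costs.append(cost_per_month if month <= dev_months else 0)
        benefits.append(sum(gain for shipped, gain in releases if month > shipped))
    return costs, benefits

def break_even_month(costs, benefits):
    """Return the first month (1-based) in which cumulative net cash flow is
    zero or better, or None if that never happens within the horizon."""
    net = (benefit - cost for cost, benefit in zip(costs, benefits))
    for month, total in enumerate(accumulate(net), start=1):
        if total >= 0:
            return month
    return None

# Serial: one big release at month 16 delivering all of the value.
serial = schedule(HORIZON, 16, COST_PER_MONTH, [(16, 150)])
# Evolutionary: highest-priority value first, in releases at months 8, 12 and 16.
evolutionary = schedule(HORIZON, 16, COST_PER_MONTH, [(8, 90), (12, 40), (16, 20)])
# Evolutionary, but stopping after the first release because it was enough.
stop_early = schedule(HORIZON, 8, COST_PER_MONTH, [(8, 90)])

print("serial:", break_even_month(*serial))                    # 27
print("evolutionary:", break_even_month(*evolutionary))        # 21
print("stop after release 1:", break_even_month(*stop_early))  # 17
```

With these made-up numbers both full-scope teams spend exactly the same amount, yet the evolutionary team breaks even six months sooner; the difference comes entirely from getting the highest-value functionality into production earlier.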

So, to recap once again, a traditional approach to software development results in roughly two-thirds wastage in delivered software. Worse yet, this approach produces a later break-even point than an evolutionary approach does, increasing the financial risk to your team. In short, although waterfalls are wonderful tourist attractions, they are spectacularly poor ways to organize software development teams.

Consider an Agile Approach

So what is the solution? First, as Figure 4 depicts, you should take an agile approach to requirements elicitation, where requirements are first envisioned at a very high level and the details are then explored via iteration modeling or model storming on a just-in-time (JIT) basis. As Figure 5 indicates, for the first release of a system you need to take several days, with a maximum of two weeks for the vast majority of business systems, for initial requirements and architecture envisioning. The goal of requirements envisioning is to identify the high-level requirements as well as the scope of the release (what you think the system should do).

Model storming sessions are typically impromptu events: one team member asks another to model with them, and the session typically lasts five to ten minutes (it’s rare to model storm for more than thirty minutes). The people get together, gather around a shared modeling tool (e.g. the whiteboard), explore the issue until they’re satisfied that they understand it, and then continue on (often coding). Model storming is JIT modeling: you identify an issue which you need to resolve, you quickly grab a few teammates who can help you, the group explores the issue, and then everyone continues on as before. Extreme programmers (XPers) would call model storming sessions stand-up design sessions or customer Q&A sessions.

Figure 4. The Agile Model Driven Development (AMDD) lifecycle for software teams.

Figure 5. The value of modeling.

This streamlined, JIT approach to requirements elicitation works for several reasons:

- You can still meet your “planning needs”. By identifying the high-level requirements early you have enough information to produce an initial cost estimate and schedule.

- You minimize wastage. A JIT approach to modeling enables you to focus on just the aspects of the system that you’re actually going to build. With a serial approach, you often model aspects of the system which nobody actually wants, as you learned earlier.

- You ask better questions. The longer you wait to model storm a requirement, the more knowledge you’ll have regarding the domain and therefore you’ll be able to ask more intelligent questions.

- Stakeholders give better answers. Similarly, your stakeholders will have a better understanding of the system that you’re building because you’ll have delivered working software on a regular basis and thereby provided them with concrete feedback.

Because requirements change frequently, you need a streamlined, flexible approach to requirements change management. Agilists want to develop software which is both high-quality and high-value, and the easiest way to develop high-value software is to implement the highest priority requirements first. Agilists strive to truly manage change, not to prevent it, enabling them to maximize stakeholder ROI. Figure 6 overviews the agile approach to requirements change management, reflecting both Extreme Programming (XP)’s planning game and the Scrum methodology. Furthermore, this approach is one of several strategies supported by the Disciplined Agile (DA) tool kit. With this approach your software development team has a stack of prioritized and estimated requirements which need to be implemented; co-located teams may literally have a stack of user stories written on index cards. The team takes the highest priority requirements from the top of the stack which they believe they can implement within the current iteration. Scrum suggests that you freeze the requirements for the current iteration to provide a level of stability for the developers. XP recommends that you do not freeze the requirements, but if you do change them in the middle of the iteration then you must remove an equal amount of work and put it back on the stack. The DA tool kit supports both strategies and, more importantly, provides guidance for choosing between the two.

Figure 6. An Agile Approach to Change Management.
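The stack in Figure 6 can be sketched in a few lines of Python. This is a minimal illustration, not a tool from Scrum, XP, or DA: the `WorkItem` type, the point estimates, and the rule of stopping at the first item that doesn’t fit are my assumptions. The hypothetical `xp_swap` approximates XP’s “remove an equal amount of work” rule by pushing the lowest-priority items in the iteration back onto the stack until the iteration fits its capacity again.

```python
from dataclasses import dataclass

@dataclass
class WorkItem:
    name: str
    priority: int  # lower number = higher priority (an assumption)
    estimate: int  # size in hypothetical "points"

def by_priority(items):
    return sorted(items, key=lambda item: item.priority)

def plan_iteration(stack, capacity):
    """Take items from the top of the stack until the next one would exceed
    the team's capacity. Returns (iteration, remaining_stack)."""
    ordered = by_priority(stack)
    iteration, used = [], 0
    for index, item in enumerate(ordered):
        if used + item.estimate > capacity:
            return iteration, ordered[index:]
        iteration.append(item)
        used += item.estimate
    return iteration, []

def xp_swap(iteration, remaining, new_item, capacity):
    """XP-style change mid-iteration: add new_item, then move the
    lowest-priority work back onto the stack until the iteration fits."""
    iteration = by_priority(iteration + [new_item])
    remaining = list(remaining)
    while sum(item.estimate for item in iteration) > capacity:
        remaining.append(iteration.pop())  # last item = lowest priority
    return iteration, by_priority(remaining)

stack = [WorkItem("login", 1, 3), WorkItem("search", 2, 5),
         WorkItem("export", 3, 3), WorkItem("reports", 4, 2)]
iteration, remaining = plan_iteration(stack, capacity=8)
print([item.name for item in iteration])  # ['login', 'search']

# An urgent defect arrives mid-iteration; "search" goes back on the stack.
iteration, remaining = xp_swap(iteration, remaining, WorkItem("fix-checkout", 0, 3), capacity=8)
print([item.name for item in iteration])  # ['fix-checkout', 'login']
print([item.name for item in remaining])  # ['search', 'export', 'reports']
```

A Scrum team that freezes the iteration would simply never call `xp_swap`; the new item would wait on the stack until the next call to `plan_iteration`.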

New requirements, including defects identified as part of your user testing activities, are prioritized by your stakeholders and added to the stack in the appropriate place. Your stakeholders have the right to define new requirements, change their minds about existing requirements, and even reprioritize requirements as they see fit. However, stakeholders must also be responsible for making decisions and providing information in a timely manner.
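Adding new requirements (including defects) to the stack in the appropriate place, and letting stakeholders reprioritize at will, can be sketched as a sorted list. In this hypothetical `RequirementStack`, a lower number means a higher priority and ties keep their arrival order; those conventions, and the example requirement names, are assumptions for illustration only.

```python
import bisect
from itertools import count

class RequirementStack:
    """Priority-ordered stack of requirements (lower number = higher priority)."""

    def __init__(self):
        self._items = []         # kept sorted as (priority, arrival, name) tuples
        self._arrival = count()  # tie-breaker so equal priorities keep arrival order

    def add(self, name, priority):
        """Insert a new requirement or defect at its place in the stack."""
        bisect.insort(self._items, (priority, next(self._arrival), name))

    def reprioritize(self, name, new_priority):
        """Stakeholders changed their minds: move an existing requirement.
        A moved item goes behind others that share its new priority."""
        for index, (_, _, existing) in enumerate(self._items):
            if existing == name:
                del self._items[index]
                self.add(name, new_priority)
                return
        raise KeyError(name)

    def top(self, n):
        """The n highest-priority requirements, i.e. the next work to pull."""
        return [name for _, _, name in self._items[:n]]

stack = RequirementStack()
stack.add("search", 2)
stack.add("export", 3)
stack.add("login", 1)
stack.add("fix-invoice-rounding", 2)  # a defect found in user testing
print(stack.top(4))  # ['login', 'search', 'fix-invoice-rounding', 'export']
stack.reprioritize("export", 0)
print(stack.top(2))  # ['export', 'login']
```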

At first this strategy appears to be a radical approach to requirements management, but it has several interesting benefits:

- Stakeholders are in control of the scope. They can change the requirements at any point in time, as they need to.

- Stakeholders are in control of the budget. They can fund the initiative for as long as they want, to the extent that they want. When you reach the end of the budgeted funds, you either decide to continue funding the effort or you decide to put whatever you’ve got into production.

- Stakeholders are in control of the schedule. As with the budget, they decide how long the effort continues and when to put it into production.

- Wastage is minimized. Because the initiative is continually driven by the stakeholders’ priorities, each increment provides the greatest possible gain in stakeholder value. Because stakeholders review each incremental delivery, progress is always visible and it’s unlikely that the team will be sidetracked by unimportant issues or deliver something that doesn’t meet stakeholder needs. In other words, developers maximize stakeholder return on investment.
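The budget and schedule points can be illustrated with a small simulation: stakeholders fund one iteration at a time, each iteration pulls the highest-priority work that fits, and when the money runs out whatever has been built is what goes into production. The function name, the tuple layout, and the numbers below are hypothetical.

```python
def deliver_within_budget(stack, capacity, cost_per_iteration, budget):
    """stack holds (name, priority, estimate) tuples; lower priority number = more important.

    Funds iterations while the budget covers one more; each iteration takes
    items from the top of the stack until the next would exceed capacity.
    Returns (delivered_names, iterations_funded, budget_left)."""
    if any(estimate > capacity for _, _, estimate in stack):
        raise ValueError("an item is larger than one iteration; split it first")
    remaining = sorted(stack, key=lambda item: item[1])
    delivered, iterations = [], 0
    while remaining and budget >= cost_per_iteration:
        budget -= cost_per_iteration
        iterations += 1
        used = 0
        while remaining and used + remaining[0][2] <= capacity:
            name, _, estimate = remaining.pop(0)
            delivered.append(name)
            used += estimate
    return delivered, iterations, budget

stack = [("login", 1, 3), ("search", 2, 5), ("export", 3, 3),
         ("reports", 4, 2), ("themes", 5, 4)]
print(deliver_within_budget(stack, capacity=8, cost_per_iteration=50, budget=120))
# (['login', 'search', 'export', 'reports'], 2, 20)
```

Raising the budget to 170 would fund a third iteration and deliver “themes” as well; either way, what gets cut is the lowest-priority work, not the most valuable.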

The only major impediment to this approach is cultural inertia. Many organizations have convinced themselves that they need to define the requirements up front and that they need comprehensive documentation before teams can begin development. They often do this in the name of minimizing financial risk, because they want to know what they’re going to get for their money, and as a result they incur incredible wastage and actually increase financial risk by delaying the break-even point of their deployed system. Choosing to succeed is one of the hardest things that you’ll ever do.