Agile Works: Answering the Question “Where is the Proof?”


2. I believe that the expectations of people requiring proof aren’t realistic. Geoffrey Moore, in his book Crossing the Chasm, describes five profiles of technology adopters: innovators, who pursue new concepts aggressively; early adopters, who pursue new concepts very early in their lifecycle; the early majority, who wait and see before buying into a new concept; the late majority, who are concerned about their ability to handle a new concept should they adopt it; and laggards, who simply don’t want anything to do with new approaches. Figure 1 depicts Moore’s chasm with respect to agility.

Figure 1. Crossing the Agile Chasm.

People who fit the innovator or early adopter profile are comfortable reading a web page or book describing agile techniques; they’ll think about the described concept for a bit and then tailor it for their environment. This is where most agile techniques currently sit in their lifecycle, and it is organizations of these types that are readily adopting them. The early majority will wait for sufficient anecdotal evidence to exist before making the jump to agile software development, and we’re starting to see adoption by some of these organizations now.

When I first wrote this article in 2003, we were very likely on the left-hand side of the chasm. In February 2006 I wrote that I believed we were on the right-hand side; in other words, that we had crossed the chasm. Unfortunately there are no hard and fast rules for determining whether we’ve crossed; only hindsight several years from now will tell. My guess is that we have crossed over.

Unfortunately, the question “where is the proof?” is typically asked by organizations that fit the late majority or even laggard profiles, as Figure 1 indicates. Because agile techniques clearly aren’t at that stage in their lifecycle yet, I believe that this question simply isn’t a fair one at this time. Note that there’s nothing wrong if your organization fits into either one of these profiles; many organizations are very successful following these business models. The important thing is that you recognize this and act accordingly.

3. We’re spoiled. Previous work within the software metrics field may have set the “proof bar” too high. In the book Software Assessments, Benchmarks, and Best Practices, Capers Jones of Software Productivity Research presents a wealth of information gathered over decades pertaining to software development techniques and technologies. In Table 5.4 of that book Jones presents the effectiveness of various development techniques: the most effective is the reuse of high-quality deliverables, with a 350% adjustment factor, whereas quality estimating tools provide a 19% adjustment factor and formal inspections a 15% adjustment factor. Table 5.5 goes on to list negative adjustment factors. For example, crowded office space gives a -27% adjustment factor, no client participation a -13% adjustment factor, and geographic separation a -24% adjustment factor. My point is that current “best” and worst practices have been examined in detail, but newly proposed techniques such as refactoring and co-location with your customers have not been well studied. To compound this problem, the vast majority of agile methodologists are practitioners who are actively working on software teams and who presumably have little time to invest in theoretical study of their techniques. In other words, it may be a long while until we see studies comparable to those of Capers Jones performed on agile techniques.

4. It may not be clear what actually needs to be proved regarding agile software development. In Chapter 3 of Agile Software Development Ecosystems, Jim Highsmith observes: “Agile approaches excel in volatile environments in which conformance to plans made months in advance is a poor measure of success. If agility is important, then one of the characteristics we should be measuring is that agility. Traditional measures of success emphasize conformance to predictions (plans). Agility emphasizes responsiveness to change. So there is a conflict because managers and executives say that they want flexibility, but then they still measure success based on conformance to plans. Wider adoption of Agile approaches will be deterred if we try to ‘prove’ Agile approaches are better using ‘only’ traditional measures of success.”

When it comes to measurements, agilists have a different philosophy than traditionalists. We believe that metrics such as cost variance, schedule variance, requirements variance, and task variance are virtually meaningless (unless of course you’re being paid to track these things, ca-ching!). Instead, agilists measure success through the regular delivery of high-quality, working software. Doesn’t it make sense to measure the success of software development efforts by the delivery of working software?

5. Perhaps the motivations of the people asking for proof aren’t appropriate. Are they really interested in finding an effective process, or are they merely looking for a reason to disparage an approach that they aren’t comfortable with? Are they realistic enough to recognize that no software process is perfect, that there is no silver bullet to be found? Are they really interested in proof that something works, or simply in an assurance of perceived safety? This is strange, because something that worked for someone else won’t necessarily work for them.

“First they ignore you, then they laugh at you, then they fight you, then you win.” – Mahatma Gandhi

Another interesting observation is that many of the people who tell you that Agile doesn’t work confuse “code and fix” approaches with Agile approaches. This is likely because there are no accepted criteria for determining whether a team is agile, so these people find it very difficult to distinguish accurately; they don’t know what they’re looking at.

6. There is growing evidence from the research community. Recently, books such as Pair Programming Illuminated (Addison-Wesley, 2002) and Extreme Programming Perspectives (Addison-Wesley, 2002) have published some proof that agile techniques work. The first book explores pair programming, an XP practice, and confirms the anecdotal evidence that pair programming is in fact an incredibly good idea. The second book is a collection of papers presented at two agile conferences held in 2002, many of which describe initial research into agile techniques as well as case studies. This book is a very good start at answering the proof question, and I eagerly await similar efforts in the near future (so keep your eye out for them).

7. The anecdotal evidence looks pretty good. There is currently significant anecdotal evidence that agile software development techniques work; you merely need to spend some time on newsgroups and mailing lists to discover this for yourself. If anecdotal evidence isn’t sufficient, then agile software development processes aren’t for you… yet!

8. There is significant evidence that iterative and incremental development works. Craig Larman, in Chapter 6 of his book Agile and Iterative Development: A Manager’s Guide, summarizes a vast array of writings pertaining to iterative and incremental (I&I) development. He cites noted software thought leaders such as Harlan Mills, Barry Boehm, Tom Gilb, Tom DeMarco, Ed Yourdon, Fred Brooks, and James Martin regarding their experiences with I&I development. More importantly, he discusses extensive studies into the success factors of software development. He makes a very good argument that there is proof that many of the common practices within agile software development, such as incremental delivery and iterative approaches that embrace change, do in fact work. He also cites evidence showing that serial approaches to development, larger teams, and longer release cycles lead to higher incidences of failure. I highly recommend this book for anyone interested in building a case for agile software development within their organization.

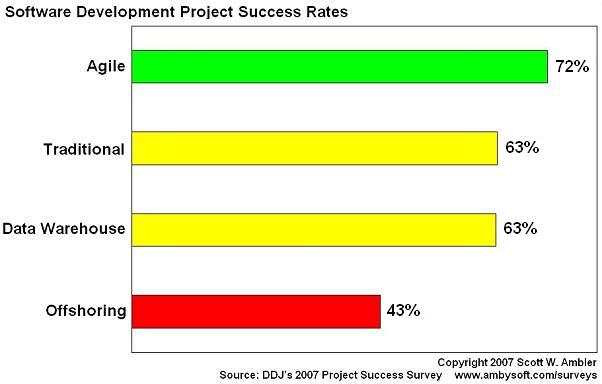

9. There is growing survey evidence that agile works better than traditional approaches. The DDJ 2007 Project Success Survey showed that when people define success in their own terms, Agile projects had a 72% success rate, compared with 63% for traditional and 43% for offshoring. These figures are summarized in Figure 2. The DDJ 2008 Agile Adoption Survey showed that people’s experience with agile software development was very positive (see Figure 3), and that adopting agile strategies appears to be very low-risk in practice: few organizations seem to be running into serious problems, and most are clearly benefiting. For the 2010 IT Project Success Rates Survey we adopted some ideas from the Chaos Report and started talking in terms of successful, challenged, and failed projects. Results from the 2013 IT Project Success Rates Survey are depicted in Figure 4, and as you can see, agile still outperforms traditional approaches.

Figure 2. Project success rates (2007).

Figure 3. Effectiveness of agile software development compared with traditional approaches.

Figure 4. Project success rates (2013).

10. There is growing survey evidence that agile approaches work as well as or better than traditional approaches at scale. I’ve also run surveys that explored success rates by paradigm at different levels of scale. At this point I’ve explored only two of the scaling factors of the Software Development Context Framework (SDCF), team size (see Figure 5) and geographic distribution (see Figure 6), but these two factors tend to be the ones people are most interested in. The July 2010 State of the IT Union survey explored the issue of team size, and as you can see in Figure 5, agile approaches do as well as or better than traditional approaches regardless of team size. The 2008 IT Project Success Rates Survey explored the issue of geographic distribution, and as Figure 6 shows, agile approaches do as well as or better regardless of the level of team distribution.

Figure 5. Comparing paradigm success rates by team size.

Figure 6. Comparing paradigm success rates by geographic distribution.

11. Shorter feedback cycles lead to greater success. Figure 7 maps the feedback cycle of common development techniques (for the sake of brevity not all techniques are shown) to the cost curve.

Agile techniques, such as Test Driven Design (TDD), pair programming, and Agile Model Driven Development (AMDD) all have very short feedback cycles, often on the order of minutes or hours. Traditional techniques, such as reviews, inspections, and big requirements up front (BRUF) have feedback cycles on the order of weeks or months, making them riskier and on average more expensive.

Figure 7. Mapping common techniques to the cost of change curve.